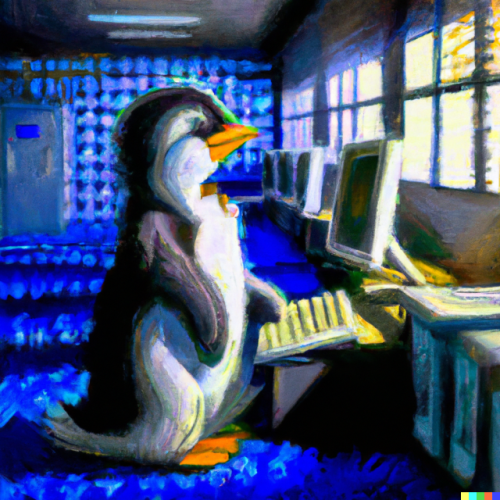

OpenAI is making a big splash with websites like Dall-E 2 and ChatGPT. The other night I asked Elijah for the basic plot of a movie, then had ChatGPT write the script, write the lyrics for a theme song, and tell me which voice actors would fit the roles. Then I had Dall-E 2 make a movie poster for it. For this blog post, I asked Dall-E 2 to draw “a van Gogh style painting of a cute penguin sitting in a datacenter typing on a computer,” and you can see the result above.

Experiences like this have led to a lot of conversations about how people feel about AI and how we should manage it. Many of the podcasts I follow have spent episodes on this topic, and I end up getting bored and frustrated. There are three main points I always wish they understood:

- This isn’t new. Sure, these two specific examples (Dall-E 2 and ChatGPT) are all over the news right now, and lots of people are being introduced to AI in a way they can readily understand. But AI has been around for a long time in many incarnations. It isn’t one specific thing; the term dates back to around 1956, and the range of what people call “AI” is enormous. At some level, you can think of it as “math.” AI doesn’t only mean a sentient robot that’s going to exterminate human life. It’s also math that makes things more efficient in your daily life. Here are some examples:

    - A grocery store deciding how to place items in its aisles
    - Lane-keeping technology in cars
    - Smart speakers
    - Junk mail filtering
    - Motion sensors differentiating between people and shadows
    - Online advertising
    - Bank fraud detection
    - Video game opponents
    - Social media feeds
    - Filters on your camera phone

- It doesn’t matter whether you like AI or not. It doesn’t matter whether you think there should be rules for its use. That’s like saying you need to control the dangerous implications of addition. Anybody on the planet can click a few buttons on a cloud service like Azure or AWS and spin up “AI” to do any number of built-in tasks, and plenty of people have the skills to code things for themselves on top of other platforms or from scratch. We can’t control it the way we try to control nuclear weapons material and knowledge.

- The technology is progressing faster than you can imagine. I’ve written before about how hard it is for humans to grasp exponential growth, and this is another place where that comes into play. Think about your tech life today (phone, internet, etc.) compared to 2000, when we thought flip phones were amazing and iMacs were the hot product from Apple. We’ve come an incredibly long way in 20 years. Not only is it hard, if not impossible, to imagine what another leap of that size looks like, but this time it’s going to happen in about 10 years. Then the same leap will happen in 5 years, then 2 years, and so on. So if you think ChatGPT is impressive today, don’t turn your back on it for too long before you try it again.

So yes, these new technologies are impressive, but for people who work with this stuff every day, the reaction is “Hey, good job. That’s impressive.” It’s not the “WE’RE ALL GOING TO DIE! LOOK WHAT HAPPENED OUT OF THE BLUE!” story that you get from the media. It’s almost like you shouldn’t rely on things like TV/cable news for your news…